
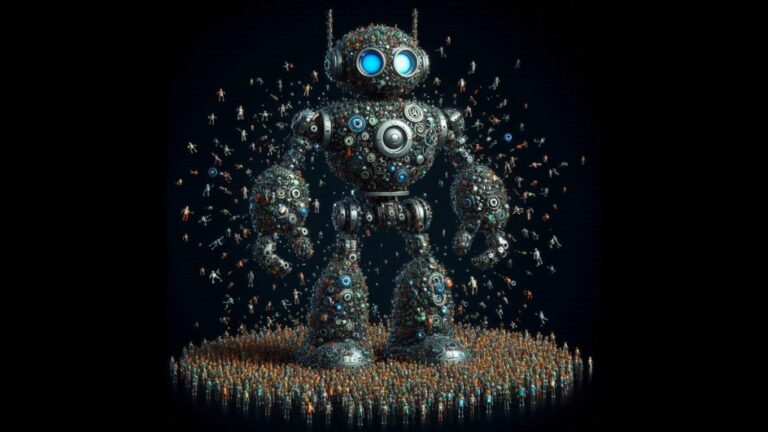

Mixture of Experts (MoE) has become a popular technique for scaling large language models (LLMs) without increasing computational costs. Rather than devoting the entire model capacity to every input, the MoE architecture routes data to small but specialized "expert" modules. MoE enables LLMs to increase their parameter count while keeping inference costs low. MoE is used in several popular LLMs, including Mixtral, DBRX, Grok and, reportedly, GPT-4.

However, current MoE techniques have limitations that restrict them to a relatively small number of experts. In a new paper, Google DeepMind introduces Parameter Efficient Expert Retrieval (PEER), a novel architecture that can scale MoE models to millions of experts, further improving the performance-compute trade-off of large language models.

Challenges of scaling LLMs

The past few years have shown that scaling language models by increasing their parameter count can improve performance and unlock new capabilities. However, there is a limit to how far a model can be scaled before it runs into compute and memory bottlenecks.

Every transformer block in an LLM has attention layers and feedforward (FFW) layers. The attention layer computes the relations between the sequence of tokens passed to the transformer block. The feedforward network is responsible for storing the model's knowledge. FFW layers account for two-thirds of the model's parameters and are one of the bottlenecks of scaling transformers. In the classic transformer architecture, all the parameters of the FFW are used during inference, which makes their computational footprint directly proportional to their size.
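The two-thirds figure follows from the usual layer shapes. The sketch below assumes the common choice of an FFW hidden width four times the model width and ignores biases and normalization layers; the function names are illustrative only.

```python
# A minimal sketch of per-block parameter counts in a standard transformer,
# assuming d_ff = 4 * d_model and ignoring biases and layer norms.

def attention_params(d_model: int) -> int:
    # Q, K, V and output projections, each d_model x d_model
    return 4 * d_model * d_model

def ffw_params(d_model: int, d_ff: int) -> int:
    # Up-projection (d_model x d_ff) plus down-projection (d_ff x d_model)
    return 2 * d_model * d_ff

def ffw_flops_per_token(d_model: int, d_ff: int) -> int:
    # In a dense FFW every weight is used for every token:
    # roughly one multiply and one add per weight
    return 2 * ffw_params(d_model, d_ff)

def ffw_share(d_model: int, d_ff: int) -> float:
    a = attention_params(d_model)
    f = ffw_params(d_model, d_ff)
    return f / (a + f)

d = 4096
print(ffw_share(d, 4 * d))  # 8d^2 / (4d^2 + 8d^2), i.e. about 0.667
```

Because `ffw_flops_per_token` is a fixed multiple of `ffw_params`, doubling the FFW width doubles its per-token compute, which is the proportionality the paragraph describes.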

MoE tries to address this challenge by replacing the single dense FFW layer with sparsely activated expert modules. Each expert contains a fraction of the parameters of the full dense layer and specializes in certain areas. The MoE has a router that assigns each input to the few experts that are most likely to provide the most accurate answer.
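A conventional top-k MoE layer can be sketched in a few lines of NumPy. This is an illustrative toy, not any specific model's implementation: the class name, the ReLU experts, the initialization scales and the softmax over the selected experts are all assumptions chosen for brevity.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

class TopKMoE:
    """Hypothetical sparse MoE layer: a linear router plus small ReLU FFW experts."""

    def __init__(self, d_model, d_expert, n_experts, k, seed=0):
        rng = np.random.default_rng(seed)
        self.k = k
        # One router column per expert
        self.router = rng.normal(0, d_model ** -0.5, (d_model, n_experts))
        # Each expert is a small two-layer FFW
        self.w_in = rng.normal(0, d_model ** -0.5, (n_experts, d_model, d_expert))
        self.w_out = rng.normal(0, d_expert ** -0.5, (n_experts, d_expert, d_model))

    def __call__(self, x):
        # x: (d_model,) hidden state of a single token
        logits = x @ self.router              # one score per expert
        top = np.argsort(logits)[-self.k:]    # indices of the k highest-scoring experts
        gates = softmax(logits[top])          # mixing weights over the chosen k only
        out = np.zeros_like(x)
        for g, i in zip(gates, top):
            h = np.maximum(x @ self.w_in[i], 0.0)  # only expert i's weights are used
            out += g * (h @ self.w_out[i])
        return out, top

moe = TopKMoE(d_model=16, d_expert=8, n_experts=8, k=2)
y, chosen = moe(np.ones(16))
print(y.shape, chosen)
```

Only `k` of the `n_experts` weight sets take part in the forward pass for a given token; the rest of the layer's parameters sit idle.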

By increasing the number of experts, MoE can increase the capacity of the LLM without increasing the computational cost of running it.
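The arithmetic behind this claim is simple: stored parameters grow with the number of experts, while the parameters touched per token depend only on how many experts are activated. The sketch below assumes two-layer experts without biases and ignores the router's own weights (a `d_model x n_experts` matrix, which is small by comparison); the sizes are arbitrary examples.

```python
def moe_param_counts(d_model, d_expert, n_experts, k):
    per_expert = 2 * d_model * d_expert  # up- and down-projection, no biases
    total = n_experts * per_expert       # parameters stored in the layer
    active = k * per_expert              # parameters used for a single token
    return total, active

# Capacity grows with the expert count; per-token compute stays flat.
for n in (8, 64, 512):
    total, active = moe_param_counts(d_model=1024, d_expert=4096, n_experts=n, k=2)
    print(n, total, active)
```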

Finding the right level of MoE granularity

According to recent studies, the optimal number of experts for an MoE model depends on several factors, including the number of training tokens and the compute budget. When these variables are balanced, MoE consistently outperforms dense models given the same amount of compute resources.

Furthermore, researchers have found that increasing the "granularity" of an MoE model, which refers to the number of experts, can lead to performance gains, especially when model size and training data grow as well.

High-granularity MoE can also enable models to learn new knowledge more efficiently. Some studies suggest that by adding new experts and regularizing them properly, MoE models can adapt to continuous data streams, which can help language models deal with constantly changing data in the environments where they are deployed.

Current approaches to MoE are limited and do not scale well. For example, they usually have fixed routers that are designed for a specific number of experts and need to be readjusted when new experts are added.
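Why a fixed router is tied to its expert count can be seen from its shape. In the minimal NumPy sketch below, the router is assumed to be a plain linear layer with one output column per expert, as in the usual top-k design; `add_expert` is a hypothetical helper for illustration, not part of any library.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, n_experts = 16, 8

# A conventional learned router: one column of weights per expert.
# Scoring every expert costs d_model * n_experts multiply-adds per token.
router = rng.normal(0, d_model ** -0.5, (d_model, n_experts))

def add_expert(router, rng):
    # The router's output dimension *is* the expert count, so a new expert
    # needs a new, untrained column that must then be trained with the rest.
    new_col = rng.normal(0, router.shape[0] ** -0.5, (router.shape[0], 1))
    return np.concatenate([router, new_col], axis=1)

router2 = add_expert(router, rng)
print(router.shape, router2.shape)  # (16, 8) (16, 9)
```

The same shape dependency also means that scoring every expert becomes expensive as the count grows toward millions.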

Parameter Efficient Expert Retrieval

DeepMind's Parameter Efficient Expert Retrieval (PEER) architecture addresses the challenges of scaling MoE to millions of experts. PEER replaces the fixed router with a learned index to efficiently route input data to a vast pool of experts. For each given input, PEER first uses a fast initial computation to create a shortlist of potential candidates before choosing and activating the top experts. This mechanism enables the MoE to handle a very large number of experts without slowing down.
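The PEER paper builds this shortlist with the product-key technique. The NumPy sketch below is a simplified single-query version under stated assumptions: the function name and shapes are illustrative. The idea is that each of the `n * n` experts has a key formed by concatenating two sub-keys, so the query is scored against only `2 * n` half-keys, the top `k` of each half are kept, and the final top `k` is picked from the `k * k` combinations.

```python
import numpy as np

def product_key_topk(query, sub_keys_1, sub_keys_2, k):
    """Top-k expert indices (and scores) out of n * n experts.

    query:      (d,) vector, split into two halves
    sub_keys_1: (n, d/2) first set of sub-keys
    sub_keys_2: (n, d/2) second set of sub-keys
    Expert (i, j) has key concat(sub_keys_1[i], sub_keys_2[j]), so its score
    is the sum of the two half-scores; index i * n + j identifies it.
    """
    half = query.shape[0] // 2
    s1 = sub_keys_1 @ query[:half]           # n scores instead of n * n
    s2 = sub_keys_2 @ query[half:]
    top1 = np.argsort(s1)[-k:]               # shortlist from each half
    top2 = np.argsort(s2)[-k:]
    # The true top-k over all n * n experts is guaranteed to lie in these k * k pairs
    cand = s1[top1][:, None] + s2[top2][None, :]
    flat = np.argsort(cand, axis=None)[-k:][::-1]   # best k candidates, descending
    rows, cols = np.unravel_index(flat, cand.shape)
    n = sub_keys_2.shape[0]
    return top1[rows] * n + top2[cols], cand[rows, cols]

rng = np.random.default_rng(0)
n, d, k = 32, 16, 4                          # 32 * 32 = 1,024 experts
K1, K2 = rng.normal(size=(n, d // 2)), rng.normal(size=(n, d // 2))
q = rng.normal(size=d)
idx, scores = product_key_topk(q, K1, K2, k)

# Brute force over all n * n full keys, for comparison
full = (K1 @ q[: d // 2])[:, None] + (K2 @ q[d // 2:])[None, :]
brute = np.argsort(full, axis=None)[-k:][::-1]
print(sorted(idx) == sorted(brute))          # True
```

The guarantee holds because an expert whose first half-score falls outside the top `k` is beaten by at least `k` other pairs sharing its second half. The work per query therefore scales with `n`, roughly the square root of the expert count, rather than with the full count.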

Unlike previous MoE architectures, where experts were often as large as the FFW layers they replaced, PEER uses tiny experts with a single neuron in the hidden layer. This design enables the model to share hidden neurons among experts, improving knowledge transfer and parameter efficiency. To compensate for the small size of the experts, PEER uses a multi-head retrieval approach, similar to the multi-head attention mechanism used in transformer models.
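A PEER-style layer for a single token might look like the sketch below. Several simplifications are assumptions, not the paper's exact method: retrieval is a brute-force top-k over all expert keys (product-key retrieval would replace it at scale), the expert nonlinearity is ReLU, and the gates are a softmax over each head's retrieved scores. Every head queries the same expert pool, so the `h * k` retrieved single-neuron experts together behave like a small MLP assembled for that token.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def peer_layer(x, query_proj, keys, u, v, k):
    """Sketch of a PEER-style layer for one token.

    x:          (d,) input hidden state
    query_proj: (h, d, d_key) one query projection per retrieval head
    keys:       (N, d_key) one key per expert, shared by all heads
    u, v:       (N, d) down- and up-projection of each single-neuron expert
    """
    out = np.zeros_like(x)
    for W_q in query_proj:              # each head retrieves independently
        q = x @ W_q
        scores = keys @ q               # brute force here; PEER uses product keys
        top = np.argsort(scores)[-k:]
        gates = softmax(scores[top])
        for g, i in zip(gates, top):
            hidden = max(float(u[i] @ x), 0.0)  # the expert's single hidden neuron
            out += g * hidden * v[i]
    return out

rng = np.random.default_rng(0)
d, d_key, N, h, k = 16, 8, 4096, 4, 8
y = peer_layer(rng.normal(size=d), rng.normal(size=(h, d, d_key)),
               rng.normal(size=(N, d_key)), rng.normal(size=(N, d)),
               rng.normal(size=(N, d)), k)
print(y.shape)  # (16,)
```

With these toy sizes, each token touches `h * k * 2 * d = 1,024` expert parameters out of `N * 2 * d = 131,072` stored.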

A PEER layer can be added to an existing transformer model or used to replace an FFW layer. PEER is also related to parameter-efficient fine-tuning (PEFT) techniques. In PEFT, parameter efficiency refers to the number of parameters that are modified to fine-tune a model for a new task. In PEER, parameter efficiency means reducing the number of active parameters in the MoE layer, which directly affects computation and activation memory consumption during pre-training and inference.

According to the paper, PEER could potentially be adapted to select PEFT adapters at runtime, making it possible to dynamically add new knowledge and capabilities to LLMs.

PEER might be used in DeepMind's Gemini 1.5 models, which, according to the Google blog, use a "new Mixture-of-Experts (MoE) architecture."

PEER in action

The researchers evaluated the performance of PEER on different benchmarks, comparing it against transformer models with dense feedforward layers and other MoE architectures. Their experiments show that PEER models achieve a better performance-compute trade-off, reaching lower perplexity scores with the same computational budget as their counterparts.
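Perplexity is the exponential of the average negative log-likelihood a model assigns to the actual next tokens, so lower values mean the model is less "surprised" by the text. A minimal sketch, assuming the per-token probabilities have already been computed:

```python
import math

def perplexity(token_probs):
    """Perplexity from the probabilities a model assigned to the observed tokens."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)

# Roughly 4: as uncertain as a uniform guess among 4 tokens
print(perplexity([0.25, 0.25, 0.25, 0.25]))
```

A model that assigned probability 1.0 to every observed token would reach the minimum perplexity of 1.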

The researchers also found that increasing the number of experts in a PEER model further reduced perplexity.

"This design demonstrates a superior compute-performance trade-off in our experiments, positioning it as a competitive alternative to dense FFW layers for scaling foundation models," the researchers write.

These findings are interesting because they challenge the long-held belief that MoE models reach peak efficiency with a limited number of experts. PEER shows that by applying the right retrieval and routing mechanisms, it is possible to scale MoE to millions of experts. This approach can help further reduce the cost and complexity of training and serving very large language models.
