
The Allen Institute for Artificial Intelligence (Ai2) today unveiled Molmo, an open-source family of state-of-the-art multimodal AI models that outperforms top proprietary rivals, including OpenAI’s GPT-4o, Anthropic’s Claude 3.5 Sonnet, and Google’s Gemini 1.5, on several third-party benchmarks.

Because they are multimodal, the models can accept and analyze images uploaded to them, much like the leading proprietary foundation models.

Ai2 also noted in a post on X that Molmo uses “1,000 times less data” than its proprietary rivals, thanks to some clever new training techniques described in more detail below and in a technical report published by the institute, which was founded by Paul Allen and is led by Ali Farhadi.

Ai2 said the release underscores its commitment to open research, offering high-performing models with open weights and data to the broader community, and of course to companies looking for solutions they can fully own, control, and customize.

Two weeks ago, Ai2 released another open model, OLMoE, a “mixture of experts,” or combination of smaller models, designed for cost-effectiveness.

Bridging the gap between open and proprietary AI

Molmo consists of four main models with different parameter sizes and capabilities:

- Molmo-72B (72 billion parameters, or settings; the flagship model, based on Alibaba Cloud’s Qwen2-72B open-source model)

- Molmo-7B-D (a “demo model” based on Alibaba’s Qwen2-7B model)

- Molmo-7B-O (based on Ai2’s OLMo-7B model)

- MolmoE-1B (based on the OLMoE-1B-7B mixture-of-experts LLM; Ai2 says it “nearly matches the performance of GPT-4V in terms of academic benchmarks and user preferences.”)

These models achieve high performance across a range of third-party benchmarks, outperforming many proprietary alternatives. They are all available under the permissive Apache 2.0 license and can be used for almost any kind of research or commercial (e.g., enterprise-grade) purpose.

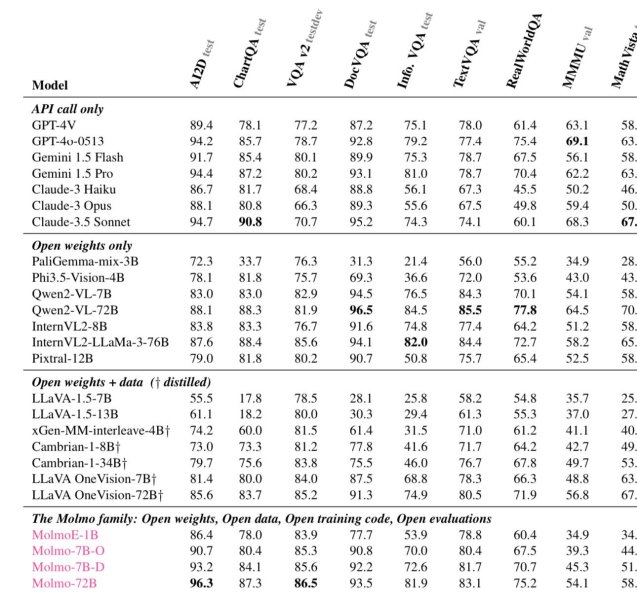

Notably, Molmo-72B leads in academic evaluations, achieving the top score across 11 key benchmarks, and ranks second in user preference, just behind GPT-4o.

Vaibhav Srivastav, a machine learning developer advocate engineer at Hugging Face, the AI code repository company, commented on the release in a post on X, emphasizing that Molmo offers a strong alternative to closed systems and sets a new standard for open multimodal AI.

In addition, Google DeepMind robotics researcher Ted Xiao took to X to praise the pointing data included in Molmo, which he considers a game-changer for robotic vision.

This capability allows Molmo to provide visual explanations and interact more effectively with physical environments, something currently lacking in most other multimodal models.

The models are not only high-performing but also entirely open, allowing researchers and developers to access and build on cutting-edge technology.

Advanced model architecture and training approach

Molmo’s architecture is designed to maximize efficiency and performance. All models use OpenAI’s ViT-L/14 336px CLIP model as the vision encoder, which processes multi-scale, multi-crop images into vision tokens.
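To make “vision tokens” concrete, the arithmetic below counts the patches a ViT-L/14 encoder produces for a single 336px square crop. It is only a back-of-the-envelope sketch: the function names and the crop count in the usage example are hypothetical and not taken from Ai2’s report, and the class token and any crop overlap are ignored.

```python
def patches_per_crop(image_size: int = 336, patch_size: int = 14) -> int:
    """Count the non-overlapping square patches in one square crop."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be a multiple of patch_size")
    per_side = image_size // patch_size  # 336 // 14 = 24 patches per side
    return per_side * per_side           # 24 * 24 = 576 patches per crop


def vision_tokens(num_crops: int, image_size: int = 336, patch_size: int = 14) -> int:
    """Total patch tokens across several crops, before any pooling."""
    return num_crops * patches_per_crop(image_size, patch_size)


if __name__ == "__main__":
    print(patches_per_crop())          # 576
    print(vision_tokens(num_crops=5))  # 2880 (the crop count is an assumed example)
```

The token count grows linearly with the number of crops, which is one reason a pooling step before the language model is useful.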

These tokens are then projected into the language model’s input space through a multilayer perceptron (MLP) connector and pooled for dimensionality reduction.

The language model component is a decoder-only Transformer, with options ranging from the OLMo series to the Qwen2 and Mistral series, each offering different capacities and levels of openness.
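The data flow described above (vision tokens, then an MLP connector, then pooling, then a token sequence for the language model) can be sketched in plain Python. This is a toy illustration, not Ai2’s implementation: the two-layer MLP with ReLU, the 2x2 average pooling window, the random weights, and all dimensions are assumptions chosen to keep the example small.

```python
import random


def linear(x, weight, bias):
    """Compute W x + b for one vector x; weight is a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def init_linear(rng, in_dim, out_dim):
    """Random toy weights; a real connector would be learned."""
    weight = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]
    return weight, [0.0] * out_dim


def mlp_connector(token, layer1, layer2):
    """Project one vision token into the (assumed) language-model width."""
    return linear(relu(linear(token, *layer1)), *layer2)


def pool_2x2(grid):
    """Average each non-overlapping 2x2 block of a square grid of vectors."""
    side = len(grid)
    pooled = []
    for r in range(0, side, 2):
        row = []
        for c in range(0, side, 2):
            block = [grid[r][c], grid[r][c + 1], grid[r + 1][c], grid[r + 1][c + 1]]
            row.append([sum(vals) / 4.0 for vals in zip(*block)])
        pooled.append(row)
    return pooled


def connect(grid, layer1, layer2):
    """Project every token, pool the grid, and flatten it into a sequence."""
    projected = [[mlp_connector(tok, layer1, layer2) for tok in row] for row in grid]
    return [tok for row in pool_2x2(projected) for tok in row]


if __name__ == "__main__":
    rng = random.Random(0)
    vision_dim, hidden_dim, llm_dim, side = 8, 16, 12, 4  # toy sizes
    grid = [[[rng.gauss(0, 1) for _ in range(vision_dim)] for _ in range(side)]
            for _ in range(side)]
    tokens = connect(grid,
                     init_linear(rng, vision_dim, hidden_dim),
                     init_linear(rng, hidden_dim, llm_dim))
    print(len(tokens), len(tokens[0]))  # 4 12
```

In this sketch the pooling step cuts the number of tokens by a factor of four while the MLP sets their width, so the decoder-only language model sees a shorter sequence of vectors in its own input space.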

Molmo’s training strategy involves two key stages:

- Multimodal pre-training: In this stage, the models are trained to generate captions using newly collected, detailed image descriptions provided by human annotators. This high-quality dataset, called PixMo, is a key factor in Molmo’s strong performance.

- Supervised fine-tuning: The models are then fine-tuned on a mix of datasets, including standard academic benchmarks and newly created datasets, enabling them to handle complex real-world tasks such as document reading, visual reasoning, and even pointing.

Unlike many contemporary models, Molmo does not rely on reinforcement learning from human feedback (RLHF). It focuses instead on a carefully tuned training pipeline that updates all model parameters starting from their pre-trained state.

Outperforming on key benchmarks

The Molmo models show impressive results across multiple benchmarks, particularly in comparison with proprietary models.

For example, Molmo-72B scores 96.3 on DocVQA and 85.5 on TextVQA, outperforming both Gemini 1.5 Pro and Claude 3.5 Sonnet in these categories. It also outperforms GPT-4o on AI2D, Ai2’s own benchmark, short for “A Diagram Is Worth A Dozen Images,” a set of more than 5,000 elementary school science diagrams and more than 150,000 rich annotations.

The models also perform well on visual grounding tasks, with Molmo-72B achieving top performance on RealWorldQA, which makes it especially promising for applications in robotics and complex multimodal reasoning.

Open access and future releases

Ai2 has made these models and datasets available on its Hugging Face space, with full compatibility with popular AI frameworks such as Transformers.
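For readers who want to try a checkpoint with Transformers, loading might look roughly like the sketch below. Several details are assumptions rather than facts from this article: the repository id `allenai/Molmo-7B-D-0924`, the need for `trust_remote_code=True`, and the helper name `load_molmo` are illustrative, so check the model card before relying on them. The function is defined but not called, since calling it downloads several gigabytes of weights.

```python
# Assumed repository id; confirm the exact name on Ai2's Hugging Face page.
MODEL_ID = "allenai/Molmo-7B-D-0924"


def load_molmo(model_id: str = MODEL_ID):
    """Load the processor and model for a Molmo checkpoint (hypothetical helper)."""
    # Imported lazily so this file can be read or imported without transformers installed.
    from transformers import AutoModelForCausalLM, AutoProcessor

    # trust_remote_code is assumed to be required because the checkpoint
    # ships its own modeling code alongside the weights.
    processor = AutoProcessor.from_pretrained(model_id, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        trust_remote_code=True,
        torch_dtype="auto",
    )
    return processor, model
```

Preparing an image and prompt and generating a response depend on methods defined in the checkpoint’s own code, so those steps are best taken from the model card rather than guessed here.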

This open access is part of Ai2’s broader vision to foster innovation and collaboration in the AI community.

In the coming months, Ai2 plans to release additional models, training code, and an expanded technical report, further enriching the resources available to researchers.

For those interested in exploring Molmo’s capabilities, a public demo and several model checkpoints are now available via Molmo’s official page.
