Getty Images

It is the perennial “cocktail party problem” – standing in a room full of people, drink in hand, trying to hear what the other guests are saying.

In fact, humans are very good at holding a conversation with one person while filtering out competing voices.

Perhaps surprisingly, however, it is a skill that technology was unable to replicate until recently.

That matters when it comes to using audio evidence in court cases. Voices in the background can make it difficult to determine who is speaking and what is being said, which can render a recording useless.

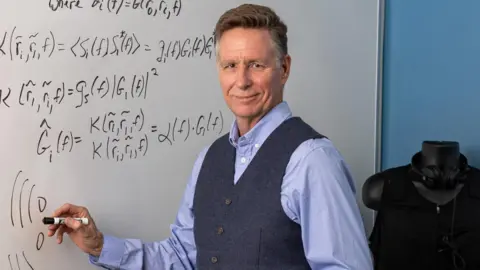

Electrical engineer Keith McElveen, founder and chief technology officer of Wave Sciences, became interested in the problem while working on war crimes cases for the U.S. government.

“We were trying to find out who ordered the massacre of civilians. Some of the evidence included a bunch of recordings of voices talking at the same time – that’s when I learned what the ‘cocktail party problem’ was,” he said.

“I had successfully removed noises such as car sounds, air conditioners or fans from speech, but when I started trying to remove speech from speech, it turned out that not only was it a very difficult problem, it was one of the classic puzzles in acoustics.

“The sound reverberated around the room, and it was mathematically difficult to solve.”

Paul Cheney

The answer, he says, is to use artificial intelligence to pinpoint and filter out all competing sounds based on where they originally came from in the room.

This doesn’t just mean other people who may be speaking – the way sound bounces around the room also creates a lot of interference, with the target speaker’s voice being heard both directly and indirectly.

In a perfectly anechoic room – one completely free of echoes – one microphone per speaker is enough to pick up what everyone is saying; but in a real room, the problem also requires a microphone for every reflected sound.

Mr. McElveen founded Wave Sciences in 2009 with the hope of developing technology that could separate overlapping sounds. Initially, the company used large numbers of microphones in what is known as array beamforming.

However, feedback from potential commercial partners was that the system required too many microphones to get good results in many situations, and in many others simply didn’t work.

“The common refrain was that if we could come up with solutions to those problems, they’d be very interested,” Mr. McElveen said.

And, he added: “We knew there had to be a solution because you can do it with just two ears.”
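For readers unfamiliar with array beamforming, the simplest textbook form is "delay and sum": shift each microphone's signal so the target voice lines up across channels, then average. The Python sketch below is an illustration only, not Wave Sciences' system; the four microphones, the per-microphone delays and the noise level are all made-up values, and the delays are assumed to be known in advance.

```python
import math
import random


def delay_and_sum(channels, delays):
    """Align each channel by its integer sample delay, then average.

    channels: equal-length lists of samples, one list per microphone.
    delays:   samples by which the target arrives late at each microphone
              (assumed known here; a real system would have to estimate them).
    """
    usable = len(channels[0]) - max(delays)
    return [
        sum(ch[t + d] for ch, d in zip(channels, delays)) / len(channels)
        for t in range(usable)
    ]


def rms(values):
    """Root-mean-square of a list of numbers."""
    return math.sqrt(sum(v * v for v in values) / len(values))


random.seed(0)
n = 2000
target = [math.sin(2 * math.pi * t / 40) for t in range(n)]  # stand-in "voice"
delays = [0, 3, 6, 9]  # hypothetical arrival delays, in samples

# Each microphone hears a delayed copy of the target plus its own noise.
channels = []
for d in delays:
    channels.append(
        [(target[t - d] if t >= d else 0.0) + random.gauss(0, 1.0) for t in range(n)]
    )

aligned = delay_and_sum(channels, delays)
clean = target[: len(aligned)]

# Residual error of one raw microphone versus the beamformed output.
err_single = rms([channels[0][t] - clean[t] for t in range(len(aligned))])
err_beam = rms([aligned[t] - clean[t] for t in range(len(aligned))])
print("single mic error:", err_single)
print("beamformed error:", err_beam)
```

Because the noise at each microphone is independent in this toy setup, averaging more aligned channels reduces it further, which hints at why early array systems leaned on large microphone counts.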

The company finally solved the problem after 10 years of internally funded research and filed a patent application in September 2019.

Keith McElveen

They came up with an artificial intelligence that analyzes how sounds bounce around a room before reaching a microphone or ear.

“We capture the sound as it hits each microphone, backtrack to figure out where it came from, and then, essentially, we suppress any sound that couldn’t possibly have come from where the person is sitting,” Mr. McElveen said.

In some ways, the effect is similar to what happens when a camera focuses on one subject and blurs the foreground and background.

“When you can only learn from very noisy recordings, the results don’t sound clear, but they’re still stunning.”
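The "backtrack to figure out where it came from" step can be loosely illustrated with a standard building block: estimating how much later a sound reaches one microphone than another by cross-correlating the two recordings. The sketch below is a generic textbook technique, not the company's patented method; the signals, the 7-sample delay and the function name `estimate_delay` are assumptions made up for the example.

```python
import random


def estimate_delay(a, b, max_lag):
    """Return the lag (in samples) at which b best matches a delayed copy of a.

    A positive result means the sound reached microphone b later than a.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for t in range(len(a)):
            u = t + lag
            if 0 <= u < len(b):
                score += a[t] * b[u]  # correlation at this candidate lag
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


random.seed(1)
n = 1000
source = [random.gauss(0, 1) for _ in range(n)]  # stand-in for a voice
true_delay = 7  # hypothetical extra travel time to the second microphone

# Microphone A hears the source directly; microphone B hears it 7 samples later.
mic_a = [s + random.gauss(0, 0.1) for s in source]
mic_b = [
    (source[t - true_delay] if t >= true_delay else 0.0) + random.gauss(0, 0.1)
    for t in range(n)
]

estimated = estimate_delay(mic_a, mic_b, max_lag=20)
print("estimated delay in samples:", estimated)
```

With two microphones a known distance apart, such a delay constrains the direction a sound arrived from, which is one simple way a system could decide whether a sound is consistent with where a given person is sitting.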

The technology was used for the first time in real-world forensics in a U.S. murder case, providing evidence that proved crucial to a conviction.

After two hit men were arrested for killing a man, the FBI wanted to prove they had been hired by a family going through a child custody dispute. The FBI arranged to trick the family into believing they were being blackmailed for their involvement, then sat back and watched their reactions.

While the FBI could easily obtain text messages and phone calls, in-person meetings at two restaurants were another story. But the court authorized the use of Wave Sciences’ algorithm, which meant the audio went from inadmissible to key evidence.

Since then, it has been put through a series of tests by other government laboratories, including in the UK. The company is currently marketing the technology to the U.S. military, which already uses it to analyze sonar signals.

Mr. McElveen said it could also be applied to hostage negotiations and suicide scenarios to ensure both sides of the conversation are heard, not just the negotiator with a bullhorn.

Late last year, the company released a software application using its learning algorithm for use by government labs performing audio forensics and acoustic analysis.

Getty Images

Eventually, it aims to release customized versions of its product for use in recording kits, car voice interfaces, smart speakers, augmented and virtual reality, sonar and assistive hearing devices.

So, for example, if you speak to your car or smart speaker, it won’t matter if there is a lot of noise around you; the device will still be able to hear what you are saying.

Terri Armenta, a forensic educator at the Forensic Science Academy, said artificial intelligence is already being applied to other areas of forensic science.

“ML [machine learning] models analyze voice patterns to determine the speaker’s identity, a process that is particularly useful in criminal investigations where voice evidence needs to be verified,” she said.

“Additionally, AI tools can detect manipulation or alterations in recordings, ensuring the integrity of evidence presented in court.”

Artificial intelligence has also entered other aspects of audio analysis.

Bosch

Bosch has a technology called SoundSee, which uses audio signal processing algorithms to analyze the sound of motors, for example, to predict failures before they occur.

“Traditional audio signal processing capabilities lack the ability to understand sound the way we humans do,” said Dr. Samarjit Das, director of research and technology at Bosch USA.

“Audio AI enables a deeper understanding and semantic interpretation of the sounds of things around us – for example, ambient sounds or acoustic cues emitted by machines – better than ever before.”

Recent tests of Wave Sciences’ algorithm show that the technology can perform as well as the human ear even with just two microphones, and even better if more microphones are added.

They also revealed something else.

“The math in all our tests shows striking similarities to human hearing. There are oddities in what our algorithm can do and how accurately it does it that are strikingly similar to some of the oddities that exist in human hearing,” Mr. McElveen said.

“We suspect that the human brain may use the same math – and that we may have stumbled upon what is really happening in the brain when it solves the cocktail party problem.”