
Microsoft is not resting its AI success on the laurels of its partnership with OpenAI.

No, far from it. Instead, the company, often referred to as Redmond after its headquarters location in Washington state, today announced three new models in its growing Phi family of language and multimodal AI.

The three new Phi-3.5 models are the 3.82-billion-parameter Phi-3.5-mini-instruct, the 41.9-billion-parameter Phi-3.5-MoE-instruct, and the 4.15-billion-parameter Phi-3.5-vision-instruct, designed for basic/fast reasoning, more powerful reasoning, and vision (image and video analysis) tasks, respectively.

All three models are available for developers to download, use, and fine-tune on Hugging Face under a Microsoft-branded MIT License that permits unrestricted commercial use and modification.

Remarkably, all three models show near state-of-the-art performance on a number of third-party benchmarks, in some cases beating models from other AI providers, including Google’s Gemini 1.5 Flash, Meta’s Llama 3.1, and even OpenAI’s GPT-4o.

That performance, combined with the permissive open license, has people praising Microsoft on the social network X.

Here is a brief look at each of the new models, based on the release notes posted on Hugging Face.

Phi-3.5 Mini Instruct: Optimized for compute-constrained environments

The Phi-3.5 Mini Instruct model is a lightweight AI model with 3.8 billion parameters, designed for instruction following and supporting a 128k-token context length.

The model is well suited to scenarios that demand strong reasoning capabilities in memory- or compute-constrained environments, including tasks such as code generation, mathematical problem solving, and logic-based reasoning.
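To make "memory-constrained" more concrete, a back-of-the-envelope estimate of the weight footprint can help. The sketch below multiplies the parameter counts quoted earlier in this article by an assumed number of bytes per parameter. The precisions shown (16-bit and 4-bit) and the helper name `weight_footprint_gb` are illustrative assumptions, not anything taken from Microsoft's release notes, and the figures ignore activations, the KV cache, and runtime overhead.

```python
# Rough weight-memory estimate; an illustrative sketch, not an official sizing guide.

# Parameter counts (in billions) as quoted in this article.
PARAMS_BILLIONS = {
    "Phi-3.5-mini-instruct": 3.82,
    "Phi-3.5-MoE-instruct": 41.9,
    "Phi-3.5-vision-instruct": 4.15,
}

# Assumed storage precisions, in bytes per parameter (hypothetical choices).
BYTES_PER_PARAM = {"16-bit": 2.0, "4-bit": 0.5}


def weight_footprint_gb(params_billions: float, bytes_per_param: float) -> float:
    """Approximate size of the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    # billions of parameters * bytes per parameter = billions of bytes = GB
    return params_billions * bytes_per_param


if __name__ == "__main__":
    for name, params in PARAMS_BILLIONS.items():
        sizes = ", ".join(
            f"{label}: {weight_footprint_gb(params, nbytes):.1f} GB"
            for label, nbytes in BYTES_PER_PARAM.items()
        )
        print(f"{name}: {sizes}")
```

Under these assumptions the Mini model's weights come to roughly 7.6 GB at 16-bit precision, which is why a model of this size is a plausible fit for a single consumer GPU or a well-equipped laptop.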

Despite its compact size, the Phi-3.5 Mini Instruct model demonstrates competitive performance in multilingual and multi-turn conversational tasks, reflecting significant improvements over its predecessors.

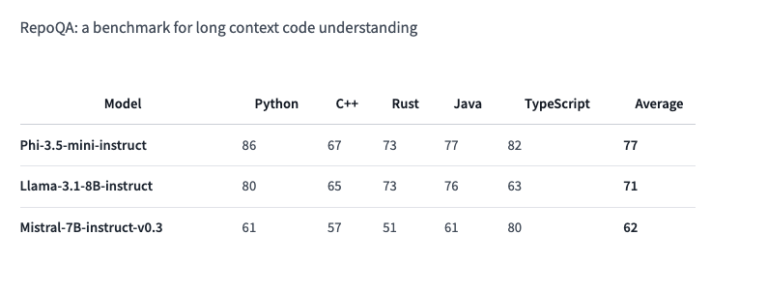

It posts near state-of-the-art performance on a number of benchmarks and outperforms other similarly sized models (Llama-3.1-8B-instruct and Mistral-7B-instruct) on the RepoQA benchmark, which measures “long-context code understanding.”

Phi-3.5 MoE: Microsoft’s “Mixture of Experts”

The Phi-3.5 MoE (Mixture of Experts) model appears to be the company’s first of its kind, combining multiple different model types into one, each specializing in different tasks.

The model uses an architecture with roughly 42 billion total parameters and supports a 128k-token context length to provide scalable AI performance for demanding applications. However, according to the Hugging Face documentation, it operates with only 6.6 billion active parameters at a time.
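The gap between total and active parameters comes from routing: for each input, a small router picks only a few experts to run. The following is a generic top-k routing sketch in plain Python, not Microsoft's implementation. The number of experts, the choice of `k=2`, the scalar "experts," and the helper names `route_top_k` and `moe_layer` are all assumptions made for illustration.

```python
import math

# Share of the parameters used per token, from the figures quoted in this article.
ACTIVE_FRACTION = 6.6 / 41.9  # about 16% of the total


def softmax(scores):
    """Turn raw router scores into probabilities (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Select the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_layer(x, experts, router_scores, k=2):
    """Run only the selected experts and blend their outputs by router weight."""
    weights = route_top_k(router_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Toy setup (hypothetical): four "experts" that simply scale their input.
experts = [lambda x, scale=scale: scale * x for scale in (1.0, 2.0, 3.0, 4.0)]
router_scores = [0.1, 2.0, 0.3, 2.0]  # made-up scores favoring experts 1 and 3

if __name__ == "__main__":
    print(route_top_k(router_scores, k=2))
    print(moe_layer(1.0, experts, router_scores, k=2))
```

In this toy run only two of the four experts execute, so compute per input scales with the selected experts rather than with the full model, which is the general idea behind the 6.6 billion active versus roughly 42 billion total figures.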

Phi-3.5 MoE is designed to excel at a variety of reasoning tasks, delivering strong performance in code, math, and multilingual language understanding, and often outperforming larger models on specific benchmarks, including RepoQA.

It also impressively beats GPT-4o mini on 5-shot MMLU (Massive Multitask Language Understanding), which covers subjects such as STEM, the humanities, and the social sciences at varying levels of expertise.

The MoE model’s distinctive architecture allows it to maintain efficiency while handling complex AI tasks across multiple languages.

Phi-3.5 Vision Instruct: Advanced multimodal reasoning

Completing the trio is the Phi-3.5 Vision Instruct model, which integrates text and image processing capabilities.

This multimodal model is particularly suited to tasks such as general image understanding, optical character recognition, chart and table comprehension, and video summarization.

Like the other models in the Phi-3.5 series, Vision Instruct supports a 128k-token context length, enabling it to manage complex, multi-frame visual tasks.

Microsoft highlights that the model was trained on a combination of synthetic and filtered publicly available datasets, with a focus on high-quality, reasoning-dense data.

Training the new Phi trio

The Phi-3.5 Mini Instruct model was trained on 3.4 trillion tokens over 10 days using 512 H100-80G GPUs, while the Vision Instruct model was trained on 500 billion tokens over 6 days using 256 A100-80G GPUs.

The Phi-3.5 MoE model, which features a mixture-of-experts architecture, was trained on 4.9 trillion tokens over 23 days using 512 H100-80G GPUs.
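For a sense of scale, the training figures above can be converted into GPU-hours and tokens processed per GPU-hour. This is simple arithmetic on the reported numbers, assuming every GPU ran around the clock for the full duration (an assumption, since real runs include restarts and idle time). The `TrainingRun` class is a hypothetical helper introduced for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingRun:
    """Reported training figures for one model (values from this article)."""
    name: str
    tokens: float
    days: int
    gpus: int

    @property
    def gpu_hours(self) -> int:
        # Assumes all GPUs ran 24 hours a day for the whole period.
        return self.gpus * self.days * 24

    @property
    def tokens_per_gpu_hour(self) -> float:
        return self.tokens / self.gpu_hours


RUNS = [
    TrainingRun("Phi-3.5-mini-instruct", 3.4e12, 10, 512),    # H100-80G
    TrainingRun("Phi-3.5-vision-instruct", 500e9, 6, 256),    # A100-80G
    TrainingRun("Phi-3.5-MoE-instruct", 4.9e12, 23, 512),     # H100-80G
]

if __name__ == "__main__":
    for run in RUNS:
        print(
            f"{run.name}: {run.gpu_hours:,} GPU-hours, "
            f"{run.tokens_per_gpu_hour / 1e6:.1f}M tokens per GPU-hour"
        )
```

Under that assumption the three runs come to 122,880, 36,864, and 282,624 GPU-hours respectively. The per-GPU-hour throughput figures are not directly comparable across runs, because the Vision Instruct model used A100 rather than H100 hardware and processes image data as well as text.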

Open source under MIT License

All three Phi-3.5 models are available under the MIT License, reflecting Microsoft’s commitment to supporting the open-source community.

This license allows developers to freely use, modify, merge, publish, distribute, sublicense, or sell copies of the software.

The license also includes a disclaimer that the software is provided “as is,” without warranty of any kind. Microsoft and other copyright holders are not liable for any claims, damages, or other liabilities that may arise from use of the software.

Microsoft’s release of the Phi-3.5 series represents a significant step forward in the development of multilingual and multimodal AI.

By offering these models under an open-source license, Microsoft enables developers to integrate cutting-edge AI capabilities into their applications, fostering innovation in both commercial and research settings.
